Hi! I am Harsh Mankodiya, a final-semester graduate student pursuing a Master’s in Computer Science at Arizona State University, specializing in Machine Learning. I am passionate about building scalable AI systems that bridge research and real-world applications. My work spans Vision Models, Multimodal Learning, Reinforcement Learning (RL), and Natural Language Processing (NLP), with a strong focus on developing adaptable AI solutions across diverse domains. My strengths lie in translating theoretical machine learning concepts into scalable systems, optimizing model pipelines for performance, and designing frameworks tailored to specific applications. I received my Bachelor’s degree in Computer Science from Nirma University. I am originally from Gujarat, India, and outside of research, I enjoy taking long walks, playing video games, and socializing. I am seeking full-time Machine Learning and Data Scientist opportunities for 2025.

[Email] [CV] [Google Scholar] [LinkedIn] [GitHub]

Please see my Google Scholar for a full list of work.

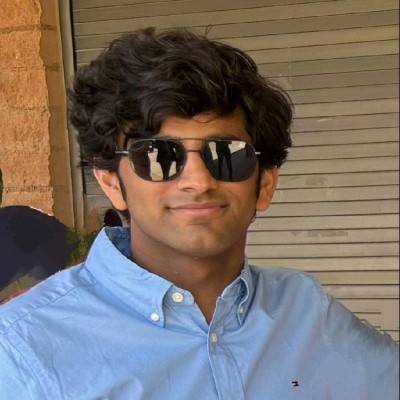

Som Sagar*, Aditya Taparia*, Harsh Mankodiya, Pranav Bidare, Yifan Zhou, Ransalu Senanayake. NeurIPS Workshop on Safe & Trustworthy Agents, 2024. [PDF]

We introduce BaTCAV, a Bayesian TCAV (Testing with Concept Activation Vectors) framework with uncertainty estimates that enhances the interpretability of robotic actions on both simulation platforms and real-world robotic systems.
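
As background for BaTCAV: in standard TCAV, the score for a concept is the fraction of class inputs whose directional derivative along the concept activation vector (CAV) is positive. The minimal Python sketch below assumes the per-example gradients and the CAV are already computed and passed as plain lists; the function names are hypothetical, and the bootstrap spread is only an illustrative stand-in for uncertainty, not the Bayesian formulation used in BaTCAV.

```python
import random
from statistics import mean, stdev


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def tcav_score(gradients, cav):
    """Fraction of examples whose directional derivative along the CAV is positive.

    gradients: per-example gradients of a class logit w.r.t. layer activations (assumed given).
    cav: concept activation vector in the same activation space (assumed given).
    """
    positive = sum(1 for g in gradients if dot(g, cav) > 0)
    return positive / len(gradients)


def bootstrap_tcav(gradients, cav, n_resamples=200, seed=0):
    """Illustrative uncertainty via bootstrap resampling of the examples (NOT BaTCAV)."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_resamples):
        sample = [rng.choice(gradients) for _ in gradients]
        scores.append(tcav_score(sample, cav))
    return mean(scores), stdev(scores)


grads = [[1.0, 0.0], [0.5, 0.5], [-1.0, 0.2], [0.2, -0.9]]
cav = [1.0, 0.0]
print(tcav_score(grads, cav))  # 0.75
```

A score near 0.5 with a wide spread suggests the concept has no consistent influence on the prediction, which is the kind of ambiguity an uncertainty-aware variant is meant to expose.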

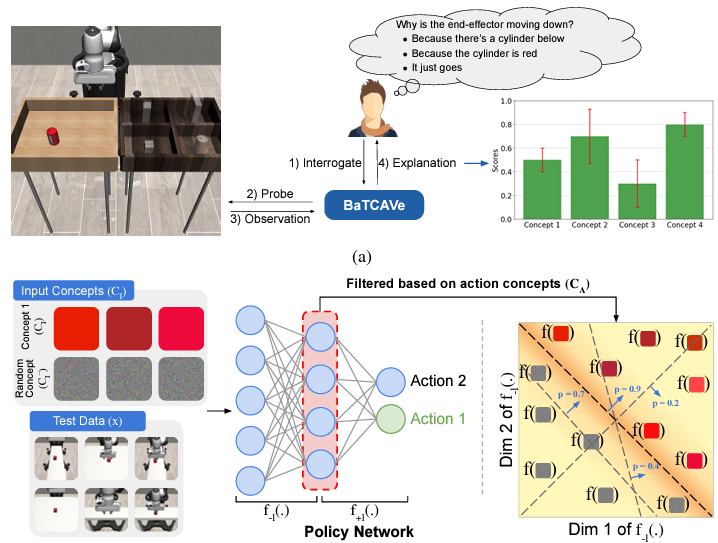

Harsh Mankodiya, Dhairya Jadav, Rajesh Gupta, Sudeep Tanwar. MDPI Applied Sciences, 2024. [PDF]

We propose an XAI-integrated autonomous vehicle (AV) system that improves the explainability of semantic segmentation models, which behave as black boxes and are difficult to analyze and interpret.

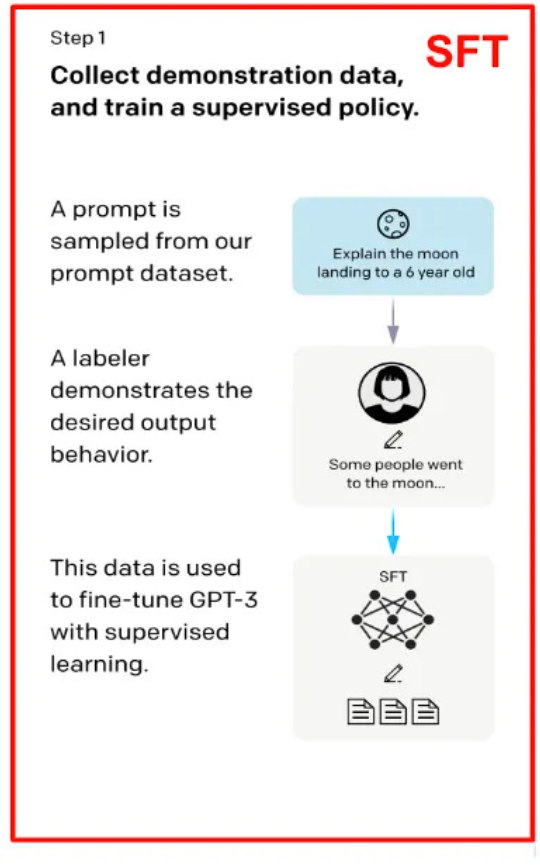

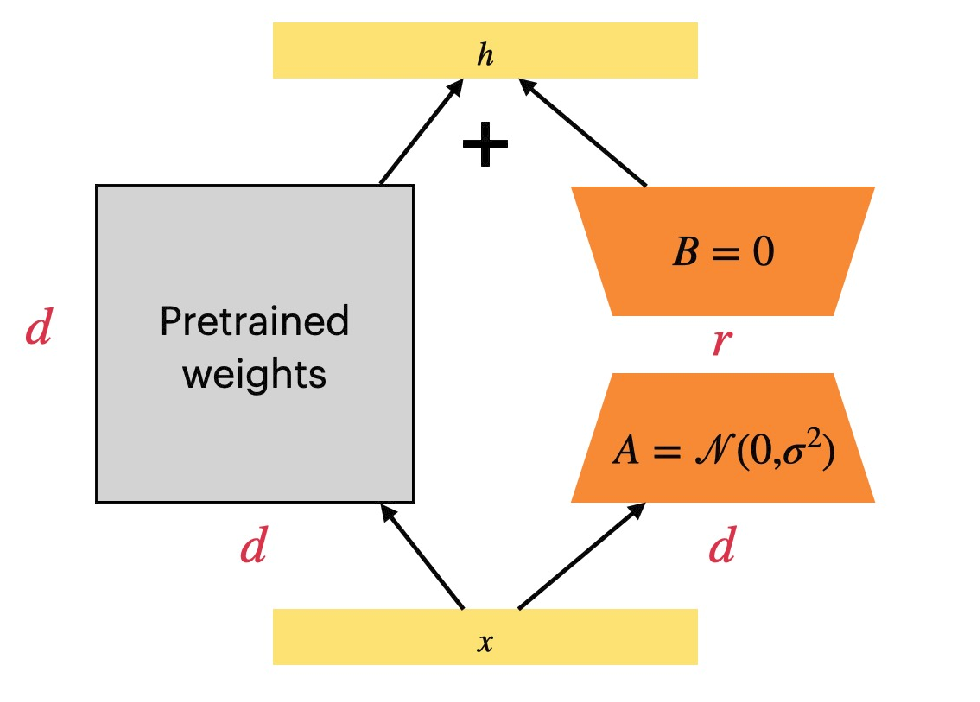

[Code] Fine-tuned LLaMA2-7B on the LIMA dataset for efficient instruction following, training on structured prompts that cover general knowledge, reasoning, and conversational tasks. Compared performance against QLoRA, LoRA, and base LLaMA2 models, demonstrating superior instruction adherence with minimal trainable parameters.
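
LoRA and QLoRA, which this entry compares against, keep the pretrained weight W frozen and train only a low-rank update B A, which is where the "minimal trainable parameters" comes from. The stdlib-only sketch below uses hypothetical function names, and rank 8 and alpha are chosen purely for illustration (not the project's settings). It counts trainable parameters for one 4096 x 4096 projection (the hidden size of LLaMA2-7B) and shows the adapted forward pass y = W x + (alpha / r) B A x.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Parameters in the low-rank factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def full_params(d_in, d_out):
    """Parameters in the frozen dense weight W (d_out x d_in)."""
    return d_in * d_out


def matvec(m, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


lora = lora_trainable_params(4096, 4096, rank=8)
full = full_params(4096, 4096)
print(lora, full, f"{100 * lora / full:.2f}%")  # 65536 16777216 0.39%
```

In the original LoRA formulation B is initialized to zero, so the adapted model starts out identical to the base model and only drifts as A and B are trained.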

[Code] Fine-tuned LLaMA2-7B with Quantized Low-Rank Adaptation (QLoRA) for multilingual sentiment analysis across 12 languages, achieving a 30% increase in test AUC and a 20% improvement in accuracy. Trained on datasets such as IndoNLU, GoEmotions, and multilingual Amazon reviews, spanning domains including social media, e-commerce, and movie reviews. Conducted a comparative analysis against GPT-2 and BERT, showing LLaMA2-7B's superior performance with minimal trainable parameters.

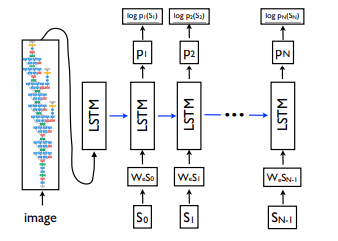

[Code] Developed an image captioning model, trained on the MS COCO Captions dataset, that leverages image embeddings from the CLIP vision encoder and DINOv2 transformer. Integrated and fine-tuned a GPT-2 decoder on ~1% of the MS COCO dataset, reaching a BLEU-4 score of 7%, and used the pre-trained GPT-2 tokenizer for caption tokenization. Implemented and evaluated several decoding strategies, including greedy decoding and beam search, to improve caption generation. The model demonstrated robust image-to-text generation.
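
The entry compares greedy decoding with beam search. Below is a minimal, model-agnostic Python sketch of beam search, assuming a hypothetical `next_log_probs(prefix)` callback that returns next-token log-probabilities (in the captioning model that role would be played by the GPT-2 decoder conditioned on image embeddings). With `beam_width=1` it reduces to greedy decoding. The toy probability table is invented purely to show the two strategies disagreeing.

```python
import math


def beam_search(next_log_probs, bos, eos, beam_width=3, max_len=10):
    """Beam search over a step function (no length normalization).

    next_log_probs(prefix) -> dict mapping next token to its log-probability.
    Hypotheses that emit eos are set aside; unfinished beams left at max_len
    also compete. Returns the best (sequence, total_log_prob).
    """
    beams = [([bos], 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for tok, lp in next_log_probs(seq).items():
                candidates.append((seq + [tok], score + lp))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            (finished if seq[-1] == eos else beams).append((seq, score))
        if not beams:
            break
    finished.extend(beams)
    return max(finished, key=lambda c: c[1])


# Invented toy "decoder": next-token probabilities depend only on the last token.
TOY = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"</s>": 0.3, "x": 0.35, "y": 0.35},
    "b": {"</s>": 0.9, "x": 0.1},
    "x": {"</s>": 1.0},
    "y": {"</s>": 1.0},
}


def toy_step(prefix):
    return {tok: math.log(p) for tok, p in TOY[prefix[-1]].items()}


greedy_seq, greedy_score = beam_search(toy_step, "<s>", "</s>", beam_width=1)
beam_seq, beam_score = beam_search(toy_step, "<s>", "</s>", beam_width=2)
print(greedy_seq, beam_seq)  # ['<s>', 'a', 'x', '</s>'] ['<s>', 'b', '</s>']
```

Greedy decoding commits to "a" because it is the most likely first token and ends with probability 0.6 x 0.35 = 0.21, while a beam of width 2 keeps "b" alive and finds a sequence with probability 0.4 x 0.9 = 0.36. Practical captioners often add length normalization, which this sketch omits.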

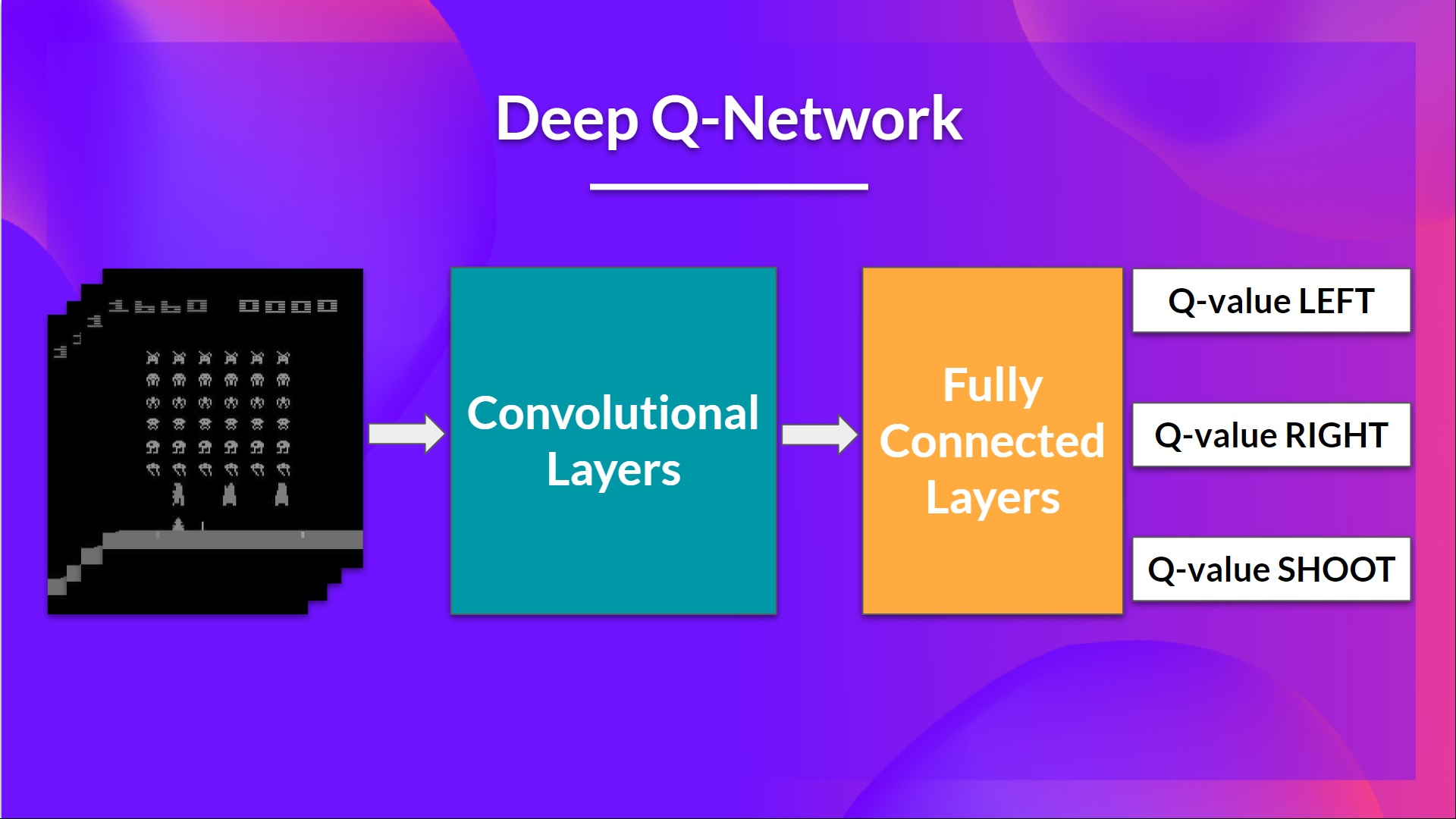

[Code] Built a Deep Q-Network (DQN) from scratch using PyTorch and OpenAI Gym, improving cumulative rewards through experience replay. Implemented epsilon-greedy exploration for efficient learning and trained a CNN-based Q-function for action selection. Logged training performance and visualized reward trends.
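
The ingredients named here (experience replay, epsilon-greedy exploration, and the Q-learning target) can be sketched without a deep learning framework. The stdlib-only Python below is illustrative rather than the project's code: class and function names are hypothetical, the CNN Q-network is omitted, Q-values are plain lists, and the epsilon schedule and `gamma` defaults are assumptions.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size FIFO store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling breaks the correlation between consecutive steps.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Random action with probability epsilon, otherwise the argmax-Q action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def linear_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from start to end over decay_steps, then hold it."""
    frac = min(step / decay_steps, 1.0)
    return start + frac * (end - start)


def td_target(reward, next_q_values, done, gamma=0.99):
    """Bellman target r + gamma * max_a' Q(s', a'), with no bootstrap at episode end."""
    return reward if done else reward + gamma * max(next_q_values)
```

A standard DQN loop typically pushes every transition into the buffer, samples minibatches once it holds enough data, and regresses the online network's Q(s, a) toward `td_target`, computed with a periodically synced target network.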

Nomic AI, NYC (July 2025 - Present)

Developing document parsing models for complex domains and high-density datasets, advancing robust layout analysis and multimodal understanding. Building scalable evaluation pipelines and optimizing model architectures to handle diverse document structures and edge cases.

Cellino Biotech, Cambridge, MA (May 2024 - August 2024)

Developed a proof of concept (PoC) for a central embedding model built on pretrained architectures to support downstream patch selection, anomaly detection, and segmentation, achieving an 82% F1-score by fine-tuning DINOv2 with ViT-based heads.

LENS Lab, ASU, Tempe, AZ (August 2023 - May 2024)

Integrated eXplainable AI (XAI) with autonomous vehicle agents in simulation environments such as CARLA and Gymnasium. Trained PPO agents with VAE-based feature extraction using Stable-Baselines3 and used CLIP models for zero-shot segmentation and concept sampling.
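
Stable-Baselines3 performs the PPO update internally; for reference, the core of that update is the clipped surrogate objective. The stdlib-only sketch below computes it for a batch of transitions, assuming log-probabilities under the old and new policies and advantage estimates are already available. The function name is hypothetical, and `clip_eps=0.2` is a commonly used value rather than a setting taken from this work.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean clipped surrogate: E[min(r * A, clip(r, 1 - eps, 1 + eps) * A)].

    r = pi_new(a|s) / pi_old(a|s), recovered from log-probabilities.
    PPO maximizes this value (equivalently, minimizes its negative).
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum makes the objective a pessimistic bound.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

Clipping removes the incentive to push the probability ratio outside [1 - eps, 1 + eps]: with a positive advantage of 1, a ratio of 1.5 contributes only 1.2.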

Bosch (AIShield), Bengaluru, India (Jan 2023 - May 2024)

Formulated a novel knowledge distillation methodology for image segmentation models using Grad-CAM. Used PyTorch Lightning to streamline data processing, model training, evaluation, and inference, with experiment tracking via MLflow. Trained SegNet and U-Net segmentation models on NVIDIA DGX A100 systems, achieving relative IoU scores above 85% across multiple datasets.
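
The Grad-CAM-based methodology itself is not described here, so the sketch below shows only the standard soft-target distillation loss that knowledge distillation methods commonly build on; it is not the Bosch method. It is stdlib-only Python for one example's class logits (for segmentation, the same loss would usually be computed per pixel and averaged). The function names are hypothetical, and `temperature=4.0` and `alpha=0.5` are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Soft-target distillation loss for one example (or one pixel).

    alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student).
    The T^2 factor keeps soft-target gradients comparable in scale to the hard loss.
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_t, p_s) if pt > 0)
    ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce
```

When the student already matches the teacher, the KL term vanishes and only the hard-label cross-entropy remains.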

Samyak Infotech, Ahmedabad, India (June 2022 - July 2022)

Trained a BERT-based LayoutLM model for business invoice information extraction, achieving an 81% F1-score. Designed labeling criteria, annotated 200+ invoices, integrated the labels into training pipelines, and managed a surrogate SQL database for efficient data retrieval.

STLabs, Nirma University, Ahmedabad, India (August 2021 - May 2023)

Collaborated with researchers, Ph.D. students, and undergraduates on projects in Computer Vision, Deep Learning, and Explainable AI (XAI).